Cloud Pak Deployment Guide

The purpose of this guide is not to be the be-all and end-all guide, but rather a path toward a successful deployment of Cloud Pak for X. There are deeper questions, but this guide was written for the 90% of deployments, not the remaining 10%. Over time, this platform will become more automated and more cohesive across Cloud Paks. This guide comes from many deployments and the findings we would like to share with you. In this guide we do not cover tethering, multi-tenancy, Disaster Recovery, or exposing services via routes vs. pointing to a hostname and port. These topics may be covered in the future.


Presale: Skills, architecture and sizing

Verify Skills

You will want to take an inventory of your team’s skills for deploying this solution. It may seem a little daunting at first, but so does everything that is new. Read through the documentation, take some training, and try an installation somewhere first. After you have performed one installation, the next one is less intimidating, and upgrades become as routine as they are on RHEL; your team is simply learning a new skill set. It will take some time and patience, but once you have it down, it will become second nature.

- Linux

- OpenShift 4.x

- Storage

- Cloud Pak

- Hardware

  - Virtualization technologies

  - Intel/Power

- Cloud Provider Skills

Sizing

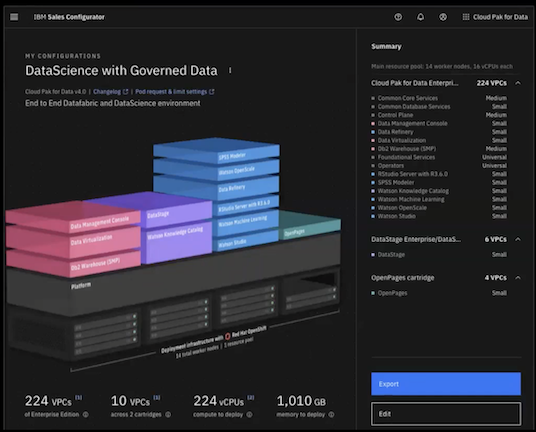

Before the purchase, send the reseller or client a reminder confirming that the following has been thought through and reviewed. Nobody wants surprises after the deal closes, when you are trying to get the platform deployed. Think carefully about the use case you are sizing. Consider adding 10% to your sizing estimate, because many teams underestimate how much the platform will be used. The Sales Configurator is designed to give you the total licensing VPCs (Virtual Processor Cores) and physical compute needed for the compute nodes. However, it does not recommend how to architect that compute, nor does it recommend the amount of storage needed.

Example: If you are using Cloud Pak for Data and the use case involves Jupyter notebooks or machine learning models, how many CPUs will those runtimes need to execute? This also affects the hardware architecture. If a notebook requires 16 cores and your nodes are 16vCPUx64GB RAM flavors, where will that notebook execute? Each node already runs other pods, so no single node has 16 free cores. In that case you need more compute-dense nodes, such as 32vCPUx128GB RAM, rather than leaner 16vCPUx64GB RAM nodes.
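The headroom and node-fit reasoning above can be sketched in a few lines of Python. This is a minimal sketch that assumes you already have a total vCPU estimate. The per-node reservation for system services and other pods is a hypothetical planning figure, not an IBM or Red Hat number, and the function names are illustrative.

```python
def with_headroom(estimated_vcpu: int, headroom_pct: int = 10) -> int:
    """Add a percentage buffer to a vCPU estimate, rounding up (integer math)."""
    return -(-(estimated_vcpu * (100 + headroom_pct)) // 100)


def workload_fits(node_vcpu: int, reserved_vcpu: int, workload_vcpu: int) -> bool:
    """True if one pod needing workload_vcpu can be scheduled on a single node.

    reserved_vcpu is an assumed allowance for system services and the other
    pods already running on that node.
    """
    return workload_vcpu <= node_vcpu - reserved_vcpu


def nodes_needed(total_vcpu: int, node_vcpu: int, reserved_vcpu: int) -> int:
    """Number of compute nodes of one flavor needed to cover total_vcpu."""
    usable = node_vcpu - reserved_vcpu
    if usable <= 0:
        raise ValueError("reservation leaves no usable vCPU on the node")
    return -(-total_vcpu // usable)  # ceiling division


# Hypothetical inputs: 96 vCPU estimated, 2 vCPU reserved per node.
total = with_headroom(96)          # 106
print(workload_fits(16, 2, 16))    # False: a 16-core notebook cannot fit on 16 vCPU nodes
print(workload_fits(32, 2, 16))    # True
print(nodes_needed(total, 32, 2))  # 4
```

The sketch uses integer ceiling division instead of multiplying by 1.1, because in floating point 100 × 1.1 is not exactly 110 and would round up one vCPU too many.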

All Cloud Paks are expected to become part of the Cloud Pak Sales Configurator. Currently only Cloud Pak for Data is included, and access is limited to IBMers and PartnerWorld partners.

NOTE: If you do not have access to the Sales Configurator, please click the link to request access. After submitting, you should receive a message saying “Thank you for your application. Administrators have been notified and will review it shortly. Once you’re approved, you’ll be able to return here and access Sales Configurator.” This is currently a manual approval process, and it could take 24 to 48 hours before you gain access. If you have any issues, please email.

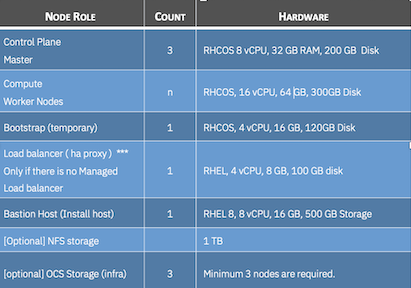

Here is a best-practice chart of production node counts and sizes. The flavor of the compute nodes will vary by Cloud Pak.

Architecture

High Availability

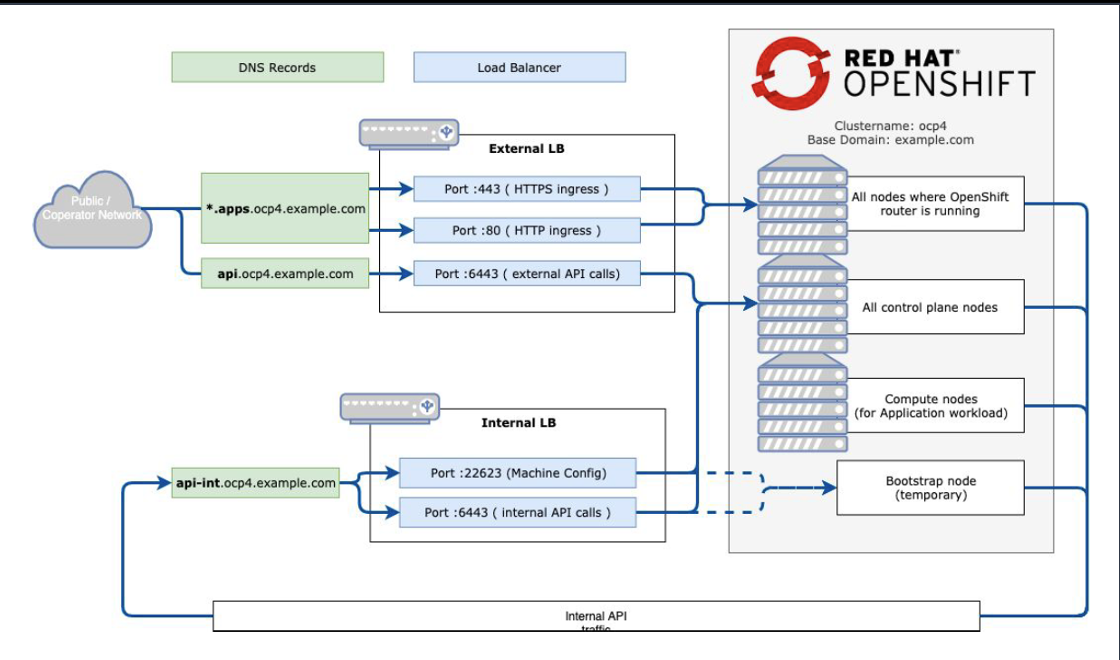

Something to think about is high availability (HA). One might say that because we are running Kubernetes, we get HA by default. This is true until a network link goes down or a data center region loses power. A more resilient architecture is a stretch cluster, where you stretch the controller and compute nodes across separate infrastructure regions. With public cloud providers, these are generally called Availability Zones.

- Data Center stretched cluster, where different areas of the data center have separate power, network, etc.

- Metro stretched cluster, where you stretch the cluster across multiple buildings. We don’t suggest leaving a metro region, because the latency will affect the responsiveness of the cluster.

- Geographic stretched cluster (cross country). While “remotely” possible, this is considered a recipe for failure, because the network latency is far too high for most Cloud Paks. We suggest a DR strategy for geographic separation; however, DR is not covered here.
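A useful way to reason about a stretch cluster is that the control plane, including its etcd members, must keep a majority after any one zone fails. The sketch below checks that condition. It assumes you have already collected each control plane node's zone, for example with `oc get nodes -L topology.kubernetes.io/zone`. The node and zone names are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def survives_zone_loss(node_zones: dict[str, str]) -> bool:
    """Return True if losing any single zone still leaves a control plane quorum.

    node_zones maps each control plane node name to its zone, for example the
    value of the topology.kubernetes.io/zone label.
    """
    total = len(node_zones)
    if total == 0:
        return False
    quorum = total // 2 + 1  # etcd needs a majority of its members
    per_zone = Counter(node_zones.values())
    return all(total - count >= quorum for count in per_zone.values())


# Hypothetical node names and zones.
print(survives_zone_loss({"master-0": "zone-a", "master-1": "zone-b", "master-2": "zone-c"}))  # True
print(survives_zone_loss({"master-0": "zone-a", "master-1": "zone-a", "master-2": "zone-b"}))  # False
```

With three controllers, this is why one controller per zone across three zones is the usual stretch layout. Two zones cannot survive losing the zone that holds two of the three controllers.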

Nodes

- Bastion or jump server (1): This should have access to the internet and can be used to house a mirrored container registry.

- Bootstrap node (1): This node can be repurposed as a worker/compute node.

- Load balancer node (1): This can be a hardware load balancer like F5 or a basic load balancer.

- Controller/master node (3): This is where OpenShift’s cluster orchestration runs.

- Worker/compute node (3 or more): This is where the Cloud Pak services run.

- Mirrored container registry node (1): This could be on the bastion node. Once a Cloud Pak moves to an Operator-based architecture, the cluster needs access to a live container registry to pull containers. If you are in an air-gapped or restricted network environment, the operators need to poll a repository. Warning: Without an available (live) registry, the cluster will fail to pull dynamic images such as Jupyter runtimes.
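Before ordering hardware, a planned node inventory can be checked against the minimum counts in the list above. This is a minimal sketch. The role keys are hypothetical labels chosen for illustration, and the mirrored registry is only required as a separate node when it is not co-located on the bastion.

```python
from __future__ import annotations

# Minimum node counts taken from the list above (role keys are illustrative).
MINIMUMS = {
    "bastion": 1,
    "bootstrap": 1,
    "load_balancer": 1,
    "control_plane": 3,
    "compute": 3,
}


def missing_nodes(plan: dict[str, int], registry_on_bastion: bool = True) -> dict[str, int]:
    """Return {role: shortfall} for every role below its minimum count."""
    required = dict(MINIMUMS)
    if not registry_on_bastion:
        # A dedicated mirrored registry node is needed when it is not co-located.
        required["registry"] = 1
    return {
        role: need - plan.get(role, 0)
        for role, need in required.items()
        if plan.get(role, 0) < need
    }


# Hypothetical plan that is one compute node short.
plan = {"bastion": 1, "bootstrap": 1, "load_balancer": 1, "control_plane": 3, "compute": 2}
print(missing_nodes(plan))  # {'compute': 1}
```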

Storage

- Do you need storage? Some Cloud Paks need a distributed file system so that pods can share data, recover from failures, and stay as stateless as possible. Generally, the supported storage options are:

  - NFS (Network File System)

  - OCS (OpenShift Container Storage)

  - PortWorx

  - Storage Suite for Cloud Paks

  - Cloud Storage options

- If you need storage, do you have it sized properly? Each storage vendor, each Cloud Pak, and each service (depending on its usage) can have its own prerequisites. Please review the Cloud Pak documentation. Example: if you are hosting a database on a Cloud Pak, your usage will directly affect the amount of storage needed.
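Replication multiplies the raw disk you need. As a rough sketch, raw capacity can be estimated as usable capacity × replicas ÷ maximum fill ratio. The defaults below are planning assumptions, not vendor requirements: three replicas (as with a replicated layer such as OCS) and a 75% maximum fill target. Check your storage vendor's and the Cloud Pak's own sizing guidance before relying on the result.

```python
def raw_capacity_gib(usable_gib: int, replicas: int = 3, max_fill_pct: int = 75) -> int:
    """Estimate raw disk capacity needed for a given usable capacity.

    replicas: copies the storage layer keeps of each block (assumed 3 here).
    max_fill_pct: how full you are willing to let the storage get (assumed 75%).
    """
    if not 0 < max_fill_pct <= 100:
        raise ValueError("max_fill_pct must be in (0, 100]")
    return -(-(usable_gib * replicas * 100) // max_fill_pct)  # ceiling division


# Hypothetical: 500 GiB of database data on 3-way replicated storage.
print(raw_capacity_gib(500))  # 2000
```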

Cloud Pak Services

You should understand which Cloud Pak services you plan to provision. Each service may have its own sizing and requirements, so review the services you plan to deploy, and talk with the client about what they might use in the future. It is good to have a roadmap of the services the client will use, so you can determine now whether something should be in place for future expansion.

| Cloud Pak | Services |
|---|---|
| Data | Data Services |
| Business Automation | Automation Services |
| Security | Security Services |
| Integration | Integration Services |

Prerequisites - Do you have everything you need to start?

Install Entitlement - API Keys

Upon close of sale, your customer will receive 2 Proof of Entitlement (POE) emails. Let them know these are coming and that you will need them to deploy their newly purchased platform.

These are sent to the “owner” of the deal upon completion of the sale paperwork. This could be procurement, the CIO, the CEO, or a manager. Delivery could take up to 2 days, depending on the situation. Please confirm with the person signing the purchase order.

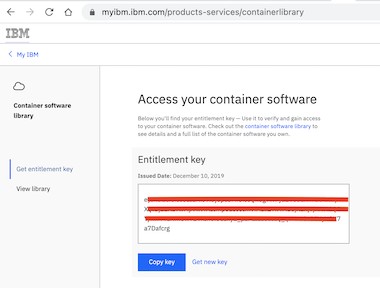

- IBM Cloud Pak Proof of Entitlement (POE) - This email comes directly from IBM. The person with access to Passport Advantage (PPA) should also see the containerized software listed under Library on My IBM. They will use this API key to gain access to the entitled software. If there is any issue, the client should contact paonline@ibm.com. This POE provides entitlement for Cloud Pak for X.

If you want to find your entitlement keys for any Cloud Pak, here are the steps.

Steps to validate:

- Verify that you have access to the entitlement registry.

- Log in with your My IBM ID.

- Copy the key on the Get entitlement key view.

- If there is no API key, click “Get new key”; this should generate a new API key.

- Click Library; it should list the software you are entitled to access.

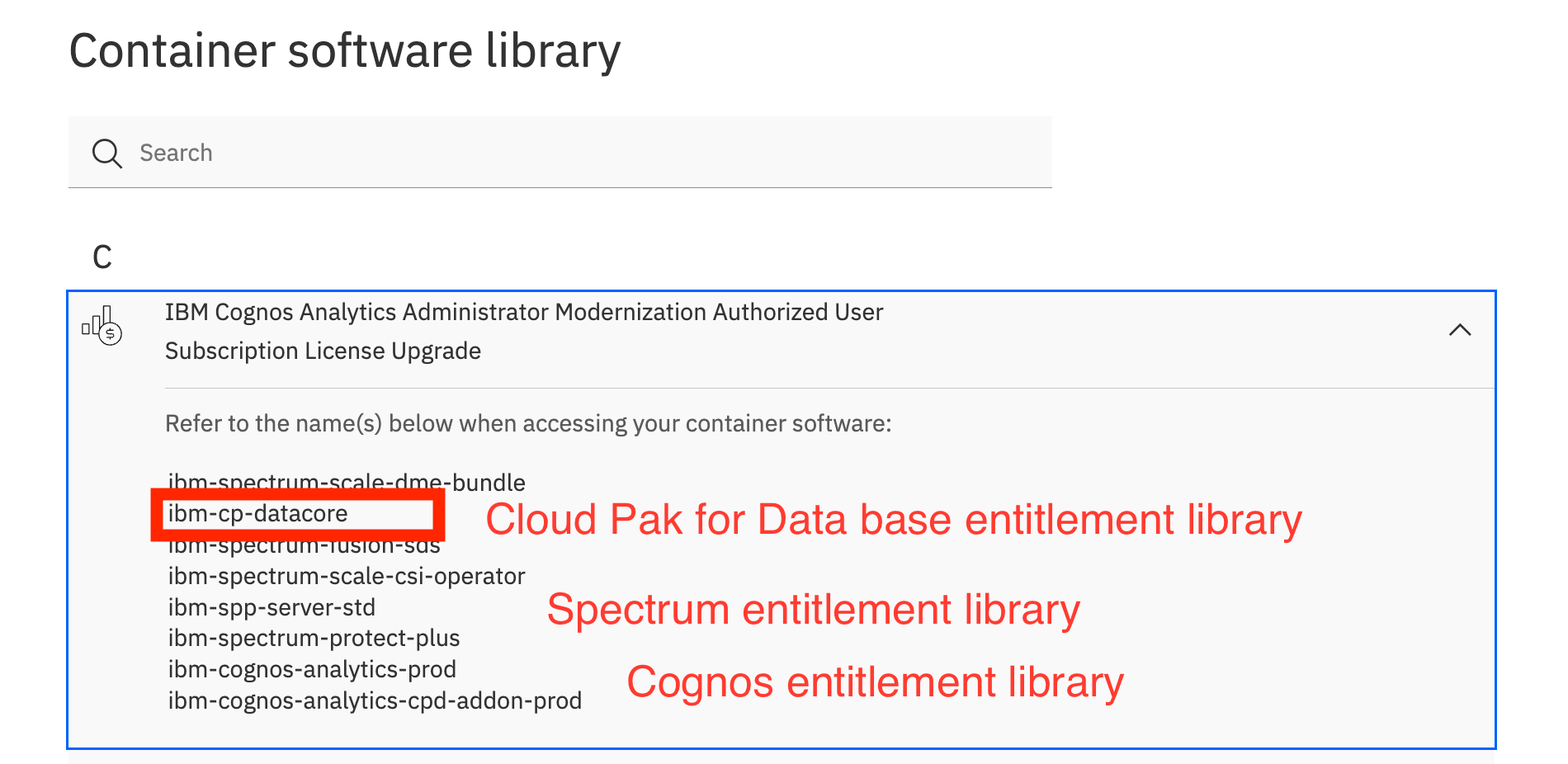

- For Partners using PartnerWorld Software Access Catalog, you should see the following:

- For Customers, you should see the following after you expand the caret (down arrow) on the right. Here are some examples:

  - Cloud Pak for Data: look for ibm-cp-datacore.

  - Spectrum: look for libraries starting with ibm-spectrum. Here is an image:

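After you have the entitlement key, it is typically supplied to the cluster as an image pull secret for the IBM entitled registry `cp.icr.io`, with the user name `cp`. The sketch below builds only the `.dockerconfigjson` content, so the structure is visible. Running `oc create secret docker-registry` with `--docker-server`, `--docker-username`, and `--docker-password` produces an equivalent secret. Where the secret must be created depends on the Cloud Pak, so follow its installation documentation. The key value shown is a placeholder.

```python
import base64
import json

# IBM entitled registry and the fixed user name used with an entitlement key.
ENTITLED_REGISTRY = "cp.icr.io"
ENTITLED_USER = "cp"


def dockerconfigjson(entitlement_key: str,
                     registry: str = ENTITLED_REGISTRY,
                     username: str = ENTITLED_USER) -> str:
    """Build a .dockerconfigjson document for pulling from the entitled registry."""
    auth = base64.b64encode(f"{username}:{entitlement_key}".encode()).decode()
    return json.dumps(
        {"auths": {registry: {"username": username, "password": entitlement_key, "auth": auth}}},
        indent=2,
    )


# "MY_ENTITLEMENT_KEY" is a placeholder; never commit a real key to source control.
print(dockerconfigjson("MY_ENTITLEMENT_KEY"))
```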

- Red Hat Proof of Entitlement (POE) - This email comes directly from Red Hat. The subscription should appear under the Subscriptions tab in the Red Hat Subscription Manager.

If there is any issue, first check here for steps to resolve it; otherwise, have the client contact paonline@ibm.com. This POE provides entitlement for OpenShift and Red Hat CoreOS/RHEL. It entitles you to a subscription to pull the software from the Red Hat container repository.

This is similar to using YUM with RHEL; in fact, you can pull the software down as RPMs. If you are entitled to OpenShift Container Storage, the process is similar, except that you purchase the subscription directly from Red Hat.

If you are moving from a trial entitlement to a full entitlement and your cluster is already installed, you might be able to update the entitlement key for each machine config. The steps are laid out in this Red Hat help document (authentication is needed to view it).

- PortWorx Proof of Entitlement (POE) - This should be available via Passport Advantage. If there is any issue, the client should contact paonline@ibm.com.

Encryption

What level of encryption does your client require?

- Data at rest or in motion

Will the end client want their data at rest encrypted? If so, will your storage support encryption, and how? To encrypt OpenShift storage, you will want to use Linux Unified Key Setup (LUKS) and/or Federal Information Processing Standards (FIPS) mode. From OpenShift 4.3 onward (including 4.6, 4.7, 4.8, and 4.9), you can run OpenShift in FIPS mode. You can also use LUKS to encrypt the volumes.

All of this needs to be prepared before installing OpenShift:

- Enabling LUKS at OS installation

- Enabling FIPS at installation and FIPS support on OpenShift

If you are using Spectrum Scale, you can get file-level encryption using GPFS.

NOTE: Db2 data stores in Cloud Pak for Data have encryption enabled by default.


Communications

SSL/TLS is your friend here; use it liberally.

NOTE: As encryption specifications evolve, you may need to disable certain ones, for example TLS 1.0. To do this, follow this document (requires a Red Hat subscription) to disable those specifications for the HAProxy.
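Disabling old protocols on the router is a server-side change. Your own automation scripts that call cluster routes can also refuse them on the client side. This is a minimal sketch using Python's standard `ssl` module, assuming your scripts talk HTTPS to the cluster. You would pass the resulting context to, for example, `urllib.request.urlopen(url, context=ctx)`. This does not change the HAProxy configuration itself.

```python
import ssl
from typing import Optional


def strict_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client context that verifies certificates and refuses anything below TLS 1.2.

    cafile can point at the cluster's CA bundle if routes use a private CA.
    """
    ctx = ssl.create_default_context(cafile=cafile)  # hostname and certificate checks on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2      # rejects TLS 1.0 and 1.1
    return ctx


ctx = strict_tls_context()
print(ctx.minimum_version == ssl.TLSVersion.TLSv1_2)  # True
```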

Supported Hardware

| Cloud Pak | Supported Hardware Information |
|---|---|
| Data | Cloud Pak for Data Control Plane |
| Business Automation | Automation |
| Security | Security |
| Integration | Integration |

Supported Storage

| Cloud Pak | Supported Storage Information |
|---|---|
| Data | Cloud Pak for Data Control Plane |
| Business Automation | Automation |
| Security | Security |
| Integration | Integration |

Supported Software

| Cloud Pak | Supported Software Information |
|---|---|
| Data | Cloud Pak for Data Control Plane |
| Business Automation | Automation |
| Security | Security |
| Integration | Integration |

Install OpenShift

OpenShift Access to software

You will be downloading or pulling directly from Red Hat, so you will use the subscription information sent to the purchaser via email from Red Hat.

- The purchaser should activate the subscription, because it was purchased through a third party (IBM). This registers the entitled OpenShift license that comes with Cloud Paks.

- Once the subscription is activated, if you are using a public cloud provider, you may need to grant the cloud provider access to your subscription.

- Review your activated subscriptions and click the one that was just activated. You should see something like Red Hat OpenShift Container Platform. This should list the appropriate YUM repo label for getting your software. Example: for RHEL 7 with OCP 4.6 on Intel, the label would be rhel-7-server-ose-4.6-rpms.

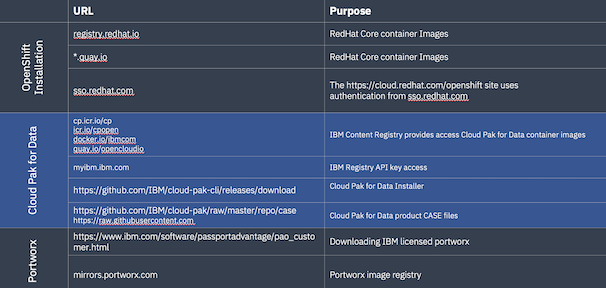

Firewall

Some implementations will be air gapped or semi air gapped (the bastion/jump node is open to the internet). Here are the ports that need to be available for installing:
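Once the port list is agreed with the network team, a quick reachability check from the bastion can catch firewall gaps before the installer hits them. This sketch uses only Python's standard `socket` module. The hostnames are hypothetical. Port 6443 (Kubernetes API) and port 22623 (Machine Config Server) are examples of ports an OpenShift install uses, not the full list your environment requires.

```python
import socket
from typing import Iterable, List, Tuple


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or the name did not resolve
        return False


def closed_ports(targets: Iterable[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Return the (host, port) pairs that could not be reached."""
    return [(host, port) for host, port in targets if not port_open(host, port)]


# Hypothetical hostnames; extend this with the full port list for your install.
EXAMPLE_TARGETS = [
    ("api.ocp.example.com", 6443),
    ("api-int.ocp.example.com", 6443),
    ("api-int.ocp.example.com", 22623),
]
```

Calling `closed_ports(EXAMPLE_TARGETS)` from the bastion returns any pairs still blocked. A TCP connect only proves that the port is reachable, not that the right service is listening.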

Installing OpenShift

Using Managed OpenShift

- Red Hat OpenShift on IBM Cloud (aka ROKS)

- Azure Red Hat OpenShift (ARO)

- Red Hat OpenShift Service on AWS (ROSA)

Upgrade Paths

Understand that Red Hat has a mantra of keeping current. As of this writing, skipping versions is not a good plan. Here is a link to an upgrade graph. NOTE: You will need a Red Hat subscription to view this upgrade graph. Notice that to get from 4.6 to 4.8, you need to upgrade to 4.7 first. Staying current is important. Otherwise, upgrading OpenShift can become difficult, because not every OpenShift release can be upgraded in place directly to a later minor release.
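The one-minor-release-at-a-time rule above can be expressed as a small planning helper. This is a simplified sketch. It assumes every intermediate minor release must be visited, and it does not consult the actual upgrade graph, which determines the exact z-stream versions you can move between.

```python
from typing import List


def minor_hops(current: str, target: str) -> List[str]:
    """List each minor release to pass through, one minor version at a time.

    Only the major.minor part is used; the z-stream edges come from Red Hat's graph.
    """
    cur_major, cur_minor = (int(part) for part in current.split(".")[:2])
    tgt_major, tgt_minor = (int(part) for part in target.split(".")[:2])
    if cur_major != tgt_major or tgt_minor < cur_minor:
        raise ValueError(f"unsupported path {current} -> {target}")
    return [f"{cur_major}.{minor}" for minor in range(cur_minor + 1, tgt_minor + 1)]


print(minor_hops("4.6", "4.8"))  # ['4.7', '4.8']
```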

Install Cloud Pak

| Cloud Pak | Control Plane | Services |
|---|---|---|
| Data | Data Control Plane | Data Services |
| Business Automation | - | Automation Services |
| Security | - | Security |
| Integration | - | Integration |

GitOps Deployments Guides

This is an ongoing effort to build out detailed GitOps deployment guides for the Cloud Paks. Not every Cloud Pak is covered here yet. This area may become hot in the coming year.

Problem Resolution

OpenShift CLI Primer

While we always want things to go smoothly and without error, there will be times when you need to dig under the covers using the OpenShift CLI or open a support ticket. This is not the be-all and end-all of CLI usage, but there are some commands that I find useful. Here is my OpenShift commands primer.

Troubleshooting

If something goes amiss, first check the troubleshooting section of the documentation. Support is working to get all of their findings into the documentation rather than the support site.
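When digging through a cluster, a common first step is to find pods that are not running or not ready. This is a minimal sketch of a hypothetical helper that reads the output of `oc get pods -A -o json`. It uses only standard pod fields (`metadata.namespace`, `metadata.name`, `status.phase`, `status.containerStatuses[].ready`). You could capture the input with `subprocess.run(["oc", "get", "pods", "-A", "-o", "json"], capture_output=True, text=True, check=True).stdout`.

```python
import json
from typing import List, Tuple


def unhealthy_pods(pods_json: str) -> List[Tuple[str, str, str, List[str]]]:
    """Return (namespace, pod, phase, not_ready_containers) for pods needing a look.

    pods_json is the output of `oc get pods -A -o json`.
    """
    problems = []
    for pod in json.loads(pods_json).get("items", []):
        meta = pod.get("metadata", {})
        status = pod.get("status", {})
        phase = status.get("phase", "Unknown")
        if phase == "Succeeded":
            continue  # completed job pods are expected to stop
        not_ready = [c.get("name", "?") for c in status.get("containerStatuses", [])
                     if not c.get("ready", False)]
        if phase != "Running" or not_ready:
            problems.append((meta.get("namespace", ""), meta.get("name", ""), phase, not_ready))
    return problems
```

The pods this returns are good candidates for `oc describe pod` and `oc logs` before opening a ticket.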

Cloud Pak Troubleshooting Documentation

| Data | Automation | Integration | Security |

Contacting Support

If a support ticket is needed, your customer can open one from the IBM Support website. For more details, see the guide on registering and opening a ticket (this is a separate product doc, so keep that in mind when reading it). You may also want to open and/or monitor tickets using your own ID. There is a process to gain access. First, you need access to the support system. Once you are in the system, your customer can grant you access to use their account.

- For the Business Partner to request access: this link will contact IBM PartnerWorld to verify the partner’s status and grant the partner access to IBM Service Request.

- Have customer grant your id permissions to access to open and monitor their support tickets.

- If you can’t open a ticket, use this link to request research into why you cannot open a support ticket.